Reinventing the Wheel

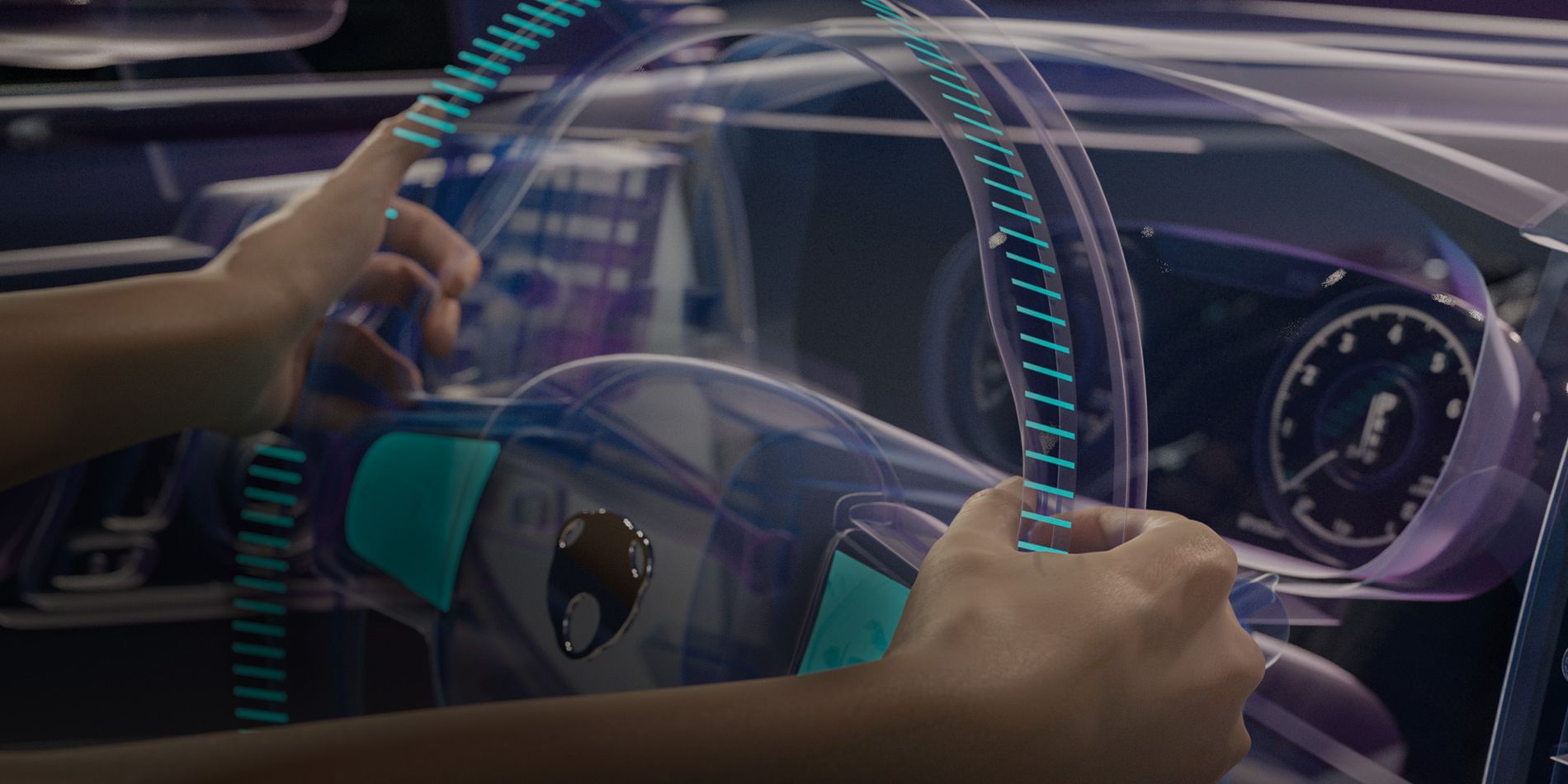

The automotive world is evolving, but do new tech features truly enhance safety? Touchscreens and automation are appealing, but they can distract drivers. See how AI can transform the steering wheel into a reliable interactive interface. It's not about reinventing the wheel, but reimagining it.

Despite what the age-old idiom says, reinventing the wheel can represent a valuable opportunity for innovation. By revisiting established concepts with fresh perspectives, we can uncover hidden inefficiencies, improve performance and adapt solutions to modern challenges.

Thankfully, innovation in the automotive industry doesn’t stand still for long. Advanced technology has contributed to electrification, software-defined vehicles and automated driving. Inside the vehicle, cabins now often boast large, sleek touchscreens for interior control, minimalist designs without physical knobs and buttons, and modern entertainment features that increase passenger comfort. However, as vehicles become more sophisticated, new challenges arise, particularly concerning safety. So, while all these modern features are impressive, do they contribute to the ultimate goal of improving vehicle safety - or do they, in fact, do the opposite?

Revolutionizing Driver Interaction and Safety with Existing Cameras

Drivers can become distracted if they divert their attention to a screen or take their hands off the steering wheel to swipe or make a selection on a remote display. Ideally, eyes should stay on the road and hands on the steering wheel.

And that is exactly why the MultiSensing software platform is revolutionizing the wheel.

Neonode’s MultiSensing technology redefines the concept of interior control by integrating advanced AI technology into existing cabin cameras to detect grip, touches and hand movement directly on the steering wheel. In-air gestures can provide amazing human-to-car interaction - especially great for passengers - but using the wheel as the primary interface for the driver is a tactile and safer way to modify dynamic driving tasks or activate comfort features without disrupting safe driving. The software not only determines whether a driver has their hands safely on the wheel but can also interpret specific interactions, which can be configured to activate any feature in the vehicle. Wheel interactions can also be combined with audible sounds or haptics for safer and more intuitive user feedback, helping eyes stay focused on the road.

When using MultiSensing to create amazing driver experiences, manufacturers can rely on data streams from existing cameras to configure response parameters. This eliminates the need for extra sensors or hardware, meaning minimalistic interior designs can be achieved.

Unlocking the Possibilities: Contextual Interaction at Your Fingertips

Comfort features can enhance convenience in everyday driving scenarios and personalized functions can act as impressive brand differentiators. MultiSensing offers unparalleled contextual interaction as it can sense any type of tap or gesture on the wheel, which can be programmed to activate different features in the vehicle. For example:

Incoming Calls: Answer calls with a simple right swipe across the top of the wheel – no need to take your eyes off the road to locate your phone or find buttons on a display. End calls with a simple double tap on the steering wheel.

Infotainment: Control the music, change stations or adjust volume with tap and swipe gestures on the wheel.

Personalization: Allow drivers to choose different gestures to activate in-cabin features of choice - or even combine gestures with other actions, like a confirmative nod of the head, for an immersive driver experience.

Cluster Displays: Use the center of the wheel as an invisible trackpad to control information on head-up displays – with touch interaction directly on the hub, there is no need for additional touchscreens. Does the driver want the speedometer front-and-center? No problem. Prefer the tachometer to be more visible? Just swipe on the wheel to center it.
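To make the idea of configurable gestures more concrete, here is a minimal sketch of how a manufacturer might bind wheel gestures to cabin features. It is not the MultiSensing API: the gesture names, wheel zones, `CabinState` fields and `dispatch` function are all hypothetical, chosen only to mirror the examples above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class CabinState:
    """Toy stand-in for vehicle state; every field is illustrative."""
    ringing: bool = False
    call_active: bool = False
    volume: int = 5
    centered_gauge: str = "speedometer"


def answer_call(state: CabinState) -> None:
    # Only answer if a call is actually coming in.
    if state.ringing:
        state.ringing = False
        state.call_active = True


def end_call(state: CabinState) -> None:
    state.call_active = False


def volume_up(state: CabinState) -> None:
    # Clamp to an assumed maximum volume of 10.
    state.volume = min(state.volume + 1, 10)


def center_tachometer(state: CabinState) -> None:
    state.centered_gauge = "tachometer"


# (gesture, wheel zone) -> action. All names are hypothetical.
BINDINGS: Dict[Tuple[str, str], Callable[[CabinState], None]] = {
    ("swipe_right", "top"): answer_call,
    ("double_tap", "rim"): end_call,
    ("swipe_up", "rim"): volume_up,
    ("swipe_left", "hub"): center_tachometer,
}


def dispatch(gesture: str, zone: str, state: CabinState) -> bool:
    """Run the bound action, if any; return True when a binding matched."""
    action = BINDINGS.get((gesture, zone))
    if action is None:
        return False
    action(state)
    return True
```

In a sketch like this, personalization amounts to editing the `BINDINGS` table, so a driver's preferred gestures could be changed without touching the actions themselves.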

Interactive Gestures for Dynamic Driving Tasks

Dynamic Driving Tasks (DDT) are the operational functions that are needed to maneuver a vehicle, such as accelerating, turning or braking. MultiSensing introduces the possibility of controlling all or some of these functions using different hand signals directly from the wheel. Depending on the manufacturer’s preferences, these could include:

Cruise Control: Simplify the interaction with Adaptive Cruise Control (ACC) and allow drivers to adjust speed effortlessly by tapping the wheel to control acceleration.

Turn Indicators: Allow drivers to signal left or right by simply raising two fingers from the wheel to indicate a turn maneuver.

Level 3 Conditional Driving Automation

Vehicle autonomy is defined by the global standard set by SAE International (formerly the Society of Automotive Engineers). Autonomy is classified on a scale from level 0 (no automation) to level 5 (full automation). At level 2 and below, the driver remains responsible for driving at all times. At level 3, the car is able to self-drive without human assistance in certain conditions; however, the driver must be ready to take over when the system requests it. At levels 4 and 5, the system does not rely on a driver to take over.

MultiSensing makes transitioning to and from autonomous mode extremely intuitive for level 3 autonomy. Drivers can double-tap on the steering wheel to initiate auto-pilot, and when returning to manual control, the software can predict when the driver is about to place their hands back on the wheel, even before contact is made.
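The handover described above can be pictured as a small state machine. The sketch below is an assumption-laden illustration, not Neonode's implementation: the mode names, the event strings (`double_tap`, `hands_approaching`, `takeover_request`, `hands_on_wheel`) and the `conditions_ok` flag are all hypothetical.

```python
from enum import Enum, auto


class Mode(Enum):
    MANUAL = auto()
    AUTOMATED = auto()
    HANDOVER = auto()  # driver is expected to retake control


def next_mode(mode: Mode, event: str, conditions_ok: bool = True) -> Mode:
    """Return the next driving mode for a (hypothetical) wheel event."""
    # A double tap engages automation only when conditions allow it.
    if mode is Mode.MANUAL and event == "double_tap" and conditions_ok:
        return Mode.AUTOMATED
    # A system request or predicted hand approach starts the handover early.
    if mode is Mode.AUTOMATED and event in ("takeover_request", "hands_approaching"):
        return Mode.HANDOVER
    # Confirmed grip on the wheel returns control to the driver.
    if mode in (Mode.AUTOMATED, Mode.HANDOVER) and event == "hands_on_wheel":
        return Mode.MANUAL
    # Any other event leaves the mode unchanged.
    return mode
```

Treating the predicted hand approach as its own event lets the vehicle prepare for manual control before contact is made, which is the behavior the paragraph above describes.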

Specific level 3 conditional driving features that could be activated using MultiSensing include:

Traffic Jam Feature: Allow drivers to slide their hands off the wheel and let the vehicle take over the monotonous stop-start flow in slow-moving traffic jams.

Automated Lane Change: Vehicles can autonomously indicate and change lanes on freeways following driver input from the steering wheel. Combining information from external sensors, the system ensures the maneuver is executed when safe to do so.

Smart Parking: Drivers in a tight squeeze can pinch the wheel to activate automatic parking, which will tell the vehicle to maneuver into a vacant parking spot or safely navigate a tight garage.

Cars that have achieved level 3 autonomy include the Honda Legend Sedan, BMW 7 Series, Mercedes-Benz EQS Sedan and Mercedes-Benz S-Class flagship sedan.

Safety Always at the Forefront

As far back as a decade ago, Neonode released the world’s first smart steering wheel in conjunction with global automotive safety supplier Autoliv. At the time, the wheel used infrared sensors to detect interaction and displayed built-in LED lights for user feedback. This innovation received an international Red Dot Design Award, but we didn’t stop there. As technology advanced, we continued to reinvent the wheel and evolve the concept to tackle new challenges. With new possibilities from AI, we succeeded in making it more efficient, more cost-effective and more customizable. By employing camera-based computer vision technology instead of integrated sensors, we were able to support over-the-air (OTA) system updates and feature additions, making it an exceptionally good choice for modern software-defined vehicles.

By keeping interaction hands-on, MultiSensing ensures minimal distraction and constant control of the wheel. It is a stellar example of how cutting-edge technology can revolutionize the driving experience by blending advanced functionality with traditional car components – setting a new standard for automotive innovation.